Calculus for Back Propagation

We look at calculus in the context of back propagation for neural networks.

TODO Basic calculus

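The crux of calculus is working out how the value of a function changes when its input is nudged by a small amount \(dx\), that is, the calculation of: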

\[ \huge f(x + dx) \]

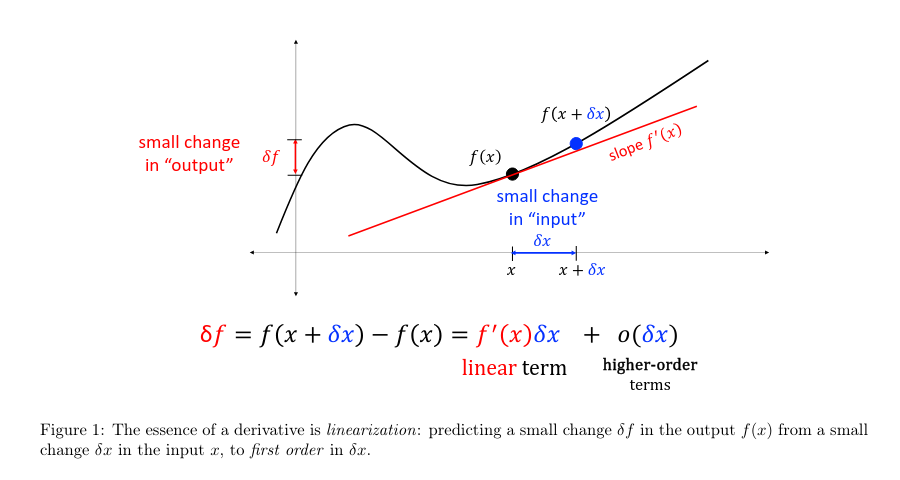

Figure from Matrix Calculus (for Machine Learning and Beyond) (pg 9, Figure 1)

Matrix Calculus (for Machine Learning and Beyond). Lecturers: Alan Edelman and Steven G. Johnson. Notes by Paige Bright, Alan Edelman, and Steven G. Johnson. Based on MIT course 18.S096 (now 18.063), IAP 2023. Pg 9, Figure 1.
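To make the calculation of \(f(x + dx)\) concrete, here is a minimal sketch that compares the true value with the standard first-order estimate \(f(x) + f'(x)\,dx\). The choice of \(f = \sin\) and the helper names `linear_approx` and `approx_error` are illustrative assumptions, not something taken from the notes above.

```python
import math


def linear_approx(f, df, x, dx):
    """First-order estimate of f(x + dx), namely f(x) + f'(x) * dx."""
    return f(x) + df(x) * dx


def approx_error(f, df, x, dx):
    """Absolute gap between the true f(x + dx) and the linear estimate."""
    return abs(f(x + dx) - linear_approx(f, df, x, dx))


# Illustrative choice (an assumption): f = sin, so f' = cos.
f, df = math.sin, math.cos
x = 1.0

# Print the error for progressively smaller steps dx.
for dx in (1e-1, 1e-2, 1e-3):
    print(f"dx={dx:g}  error={approx_error(f, df, x, dx):.3e}")
```

For a smooth function the error of the linear estimate scales roughly with \(dx^2\), so shrinking \(dx\) by a factor of 10 should shrink the printed error by a factor of about 100.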

TODO Chain Rule

TODO Matrix calculus

This is an identities-heavy section: the identities can all be derived, but it is more practical to keep them on a cheatsheet.

Cheatsheet: The Matrix Cookbook

Authors: Kaare Brandt Petersen and Michael Syskind Pedersen

Version: November 15, 2012
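An identity taken from a cheatsheet can be sanity-checked numerically before relying on it. The sketch below does this for one well-known identity, \(\frac{\partial}{\partial x}\, x^T A x = (A + A^T)\,x\), by comparing it against a central finite-difference estimate. The matrix, the vector, and the helper names (`quad_form`, `grad_identity`, `grad_numeric`) are hypothetical choices made for illustration, and plain Python lists are used only to keep the example dependency-free.

```python
def quad_form(A, x):
    """Return the scalar x^T A x for a square matrix A given as a list of rows."""
    n = len(x)
    return sum(x[i] * A[i][j] * x[j] for i in range(n) for j in range(n))


def grad_identity(A, x):
    """Gradient predicted by the identity d(x^T A x)/dx = (A + A^T) x."""
    n = len(x)
    return [sum((A[i][j] + A[j][i]) * x[j] for j in range(n)) for i in range(n)]


def grad_numeric(A, x, h=1e-6):
    """Central finite-difference estimate of the same gradient."""
    grad = []
    for i in range(len(x)):
        x_plus = list(x)
        x_minus = list(x)
        x_plus[i] += h
        x_minus[i] -= h
        grad.append((quad_form(A, x_plus) - quad_form(A, x_minus)) / (2 * h))
    return grad


# Illustrative inputs (assumptions): a small non-symmetric matrix and a vector.
A = [[1.0, 2.0], [3.0, 4.0]]
x = [0.5, -1.5]

print("identity:", grad_identity(A, x))
print("numeric: ", grad_numeric(A, x))
```

With these inputs the identity gives `[-6.5, -9.5]`, and the finite-difference estimate should agree to within floating-point rounding. The same pattern works for any scalar-valued identity: code the claimed gradient, code the function, and compare.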